Continuous Random Variables

Probability mass functions

Definition 1. The (probability) mass function (p.m.f.) of a discrete random variable $X$ is the function $f: \mathbb{R} \to[0, 1]$ given by $f(x) = \mathbf{P}(X = x)$.

Notice that $f$ is not continuous: it vanishes everywhere except at the countably many values $x_1, x_2, \dots$ taken by $X$. The distribution and mass functions are related by \(\begin{equation} F(x) = \sum_{i: x_i \leq x} f(x_i), \quad f(x) = F(x) - \lim_{y\uparrow x} F(y). \end{equation}\)

Lemma. The probability mass function $f: \mathbb{R} \to [0, 1]$ satisfies:

- the set of $x$ such that $f(x) \neq 0$ is countable,

- $\sum_{i} f(x_i) = 1$, where $x_1, x_2, \dots$ are the values of $x$ such that $f(x)\neq 0$.

Example. Binomial distribution. A coin is tossed $n$ times, and a head turns up each time with probability $p (= 1 - q)$. Then $\Omega = \{H, T\}^n$. The total number $X$ of heads takes values in the set $\{0, 1, 2, \dots , n\}$ and is a discrete random variable. Its probability mass function $f(x) = \mathbf{P}(X = x)$ satisfies $$\begin{equation} f(k)= \binom{n}{k} p^{k}(1-p)^{n-k}, \quad k=0,1, \ldots, n. \end{equation}$$ The random variable $X$ is said to have the binomial distribution with parameters $n$ and $p$. It is the sum $X = Y_1 + Y_2 + \dots + Y_n$ of $n$ Bernoulli variables.
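As a sanity check on the formula above, the following minimal sketch compares it with a brute-force enumeration of $\Omega = \{H, T\}^n$. The function names and the values $n = 5$, $p = 0.3$ are illustrative assumptions, and the enumeration treats the tosses as independent.

```python
from itertools import product
from math import comb, isclose


def binomial_pmf(k: int, n: int, p: float) -> float:
    """f(k) = C(n, k) p^k (1 - p)^(n - k) for k = 0, ..., n, and 0 elsewhere."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def pmf_by_enumeration(n: int, p: float) -> list:
    """Add up the probability of every outcome in {H, T}^n, grouped by head count."""
    totals = [0.0] * (n + 1)
    for outcome in product("HT", repeat=n):
        heads = outcome.count("H")
        # Assumption: tosses are independent, so outcome probabilities multiply.
        totals[heads] += p**heads * (1 - p) ** (n - heads)
    return totals


n, p = 5, 0.3  # illustrative values, not taken from the text
formula = [binomial_pmf(k, n, p) for k in range(n + 1)]
enumerated = pmf_by_enumeration(n, p)
total_mass = sum(formula)

for k in range(n + 1):
    print(k, round(formula[k], 6), round(enumerated[k], 6))
print("total mass:", total_mass)
```

The two columns should agree up to floating-point rounding, and the total mass should be 1, as the lemma on mass functions requires.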

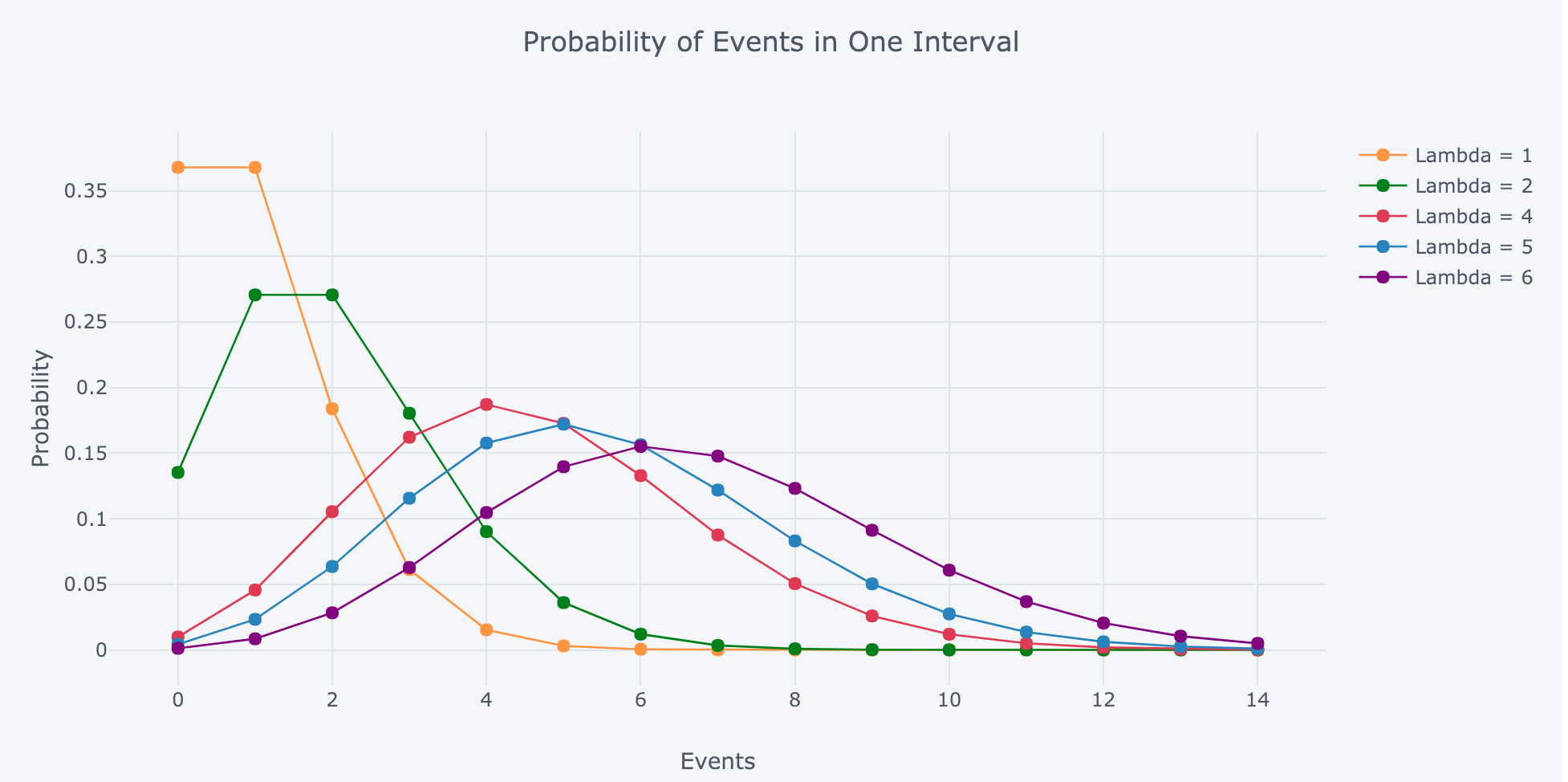

Example. Poisson distribution. If a random variable $X$ takes values in the set $\{0, 1, 2, \dots \}$ with mass function $$\begin{equation} f(k) = \frac{\lambda^k}{k!} e^{-\lambda}, \quad k = 0, 1, 2, \dots, \end{equation}$$ where $\lambda > 0$, then $X$ is said to have the Poisson distribution with parameter $\lambda$. Figure 1 shows how the p.m.f. varies with $k$.
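The Poisson mass function is easy to tabulate. The sketch below is only an illustration: the names, the value $\lambda = 3.5$ and the truncation point are assumptions. It checks that the masses sum to (approximately) 1, finds where the mass peaks, and recovers $f(k)$ as the jump of $F$ at $k$.

```python
from math import exp, factorial, isclose


def poisson_pmf(k: int, lam: float) -> float:
    """f(k) = lam^k / k! * exp(-lam) for k = 0, 1, 2, ..., and 0 elsewhere."""
    if k < 0:
        return 0.0
    return lam**k / factorial(k) * exp(-lam)


def poisson_cdf(k: int, lam: float) -> float:
    """F(k) = sum of f(i) over all i <= k."""
    return sum(poisson_pmf(i, lam) for i in range(k + 1))


lam = 3.5    # illustrative parameter, not taken from the text
cutoff = 60  # assumed truncation point; the tail beyond it is negligible here

partial_mass = sum(poisson_pmf(k, lam) for k in range(cutoff))
mode = max(range(cutoff), key=lambda k: poisson_pmf(k, lam))
jump_at_4 = poisson_cdf(4, lam) - poisson_cdf(3, lam)

print("mass on 0..59:", partial_mass)
print("most likely value:", mode)
print("F(4) - F(3):", jump_at_4, "f(4):", poisson_pmf(4, lam))
```

With this choice of $\lambda$ the mass rises up to $k = 3$ and decreases afterwards.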

Remark. The interpretation of Poisson distribution can be found via this link: The Poisson Distribution and Poisson Process Explained.

Independence of discrete random variables

Recall that events $A$ and $B$ are called independent if and only if $\mathbf{P}(A \cap B) = \mathbf{P}(A)\mathbf{P}(B)$.

Definition 2. Discrete variables $X$ and $Y$ are independent if the events $\{X = x\}$ and $\{Y = y\}$ are independent for all $x$ and $y$.

Let $A := \{ \omega \;\vert\; X(\omega) = x \}$ and $B := \{ \omega \;\vert\; Y(\omega) = y \}$. Then we can write $\mathbf{P}(A) = f_X(x)$, $\mathbf{P}(B) = f_Y(y)$ and $\mathbf{P}(A \cap B) = f(x, y)$, where $f$ is the joint mass function of $X$ and $Y$. Therefore, $X$ and $Y$ are independent if and only if $f(x,y) = f_X(x) f_Y(y)$ for all $x$ and $y$.

Remark. The equality $f(x,y) = f_X(x) f_Y(y)$ can be used as the criterion to determine whether two discrete random variables $X$ and $Y$ are independent. For continuous random variables, the analogous equality for densities requires additional assumptions, so it must be used with care.

Theorem 1. If $X$ and $Y$ are independent and $g, h: \mathbb{R} \to \mathbb{R}$, then $g(X)$ and $h(Y)$ are independent also.
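The criterion $f(x,y) = f_X(x) f_Y(y)$ and Theorem 1 can be checked exhaustively on a small sample space. The sketch below assumes two fair dice as the underlying experiment; the dice, the helper names and the choice of $g$ (parity) and $h$ (square) are illustrative, not from the text. Exact fractions are used so that equality tests are reliable.

```python
from fractions import Fraction
from itertools import product

# Assumed sample space: two fair dice, every outcome has probability 1/36.
outcomes = list(product(range(1, 7), repeat=2))
mass = Fraction(1, 36)


def joint_pmf(var_x, var_y):
    """Joint mass function f(x, y) of two random variables given as functions on outcomes."""
    table = {}
    for w in outcomes:
        key = (var_x(w), var_y(w))
        table[key] = table.get(key, 0) + mass
    return table


def is_independent(joint):
    """Check f(x, y) == f_X(x) * f_Y(y) for every pair of attainable values."""
    f_x, f_y = {}, {}
    for (x, y), m in joint.items():
        f_x[x] = f_x.get(x, 0) + m
        f_y[y] = f_y.get(y, 0) + m
    return all(joint.get((x, y), 0) == f_x[x] * f_y[y] for x in f_x for y in f_y)


def first(w):
    return w[0]


def second(w):
    return w[1]


def total(w):
    return w[0] + w[1]


dice_independent = is_independent(joint_pmf(first, second))
die_and_total_independent = is_independent(joint_pmf(first, total))
# Theorem 1 with g = parity and h = square, applied to the two dice.
transformed_independent = is_independent(
    joint_pmf(lambda w: first(w) % 2, lambda w: second(w) ** 2)
)

print(dice_independent, die_and_total_independent, transformed_independent)
```

The first die and the total are not independent: for example, the total cannot be 12 when the first die shows 1, so the joint mass there is 0 while the product of the marginals is positive.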

More generally, we say that a family $\{ X_i \;\vert\; i \in I\}$ of (discrete) random variables is independent if the events $\{X_i = x_i \}, i \in I$, are independent for all possible choices of the set $\{x_i \;\vert\; i \in I\}$ of the values of the $X_i$. That is to say, $\{X_i \;\vert\; i \in I \}$ is an independent family if and only if \(\begin{equation} \mathbf{P}(X_i = x_i \text{ for all }i\in J) = \prod_{i\in J} \mathbf{P}(X_i = x_i) \end{equation}\) for all sets $\{x_i \;\vert\; i \in I\}$ and for all finite subsets $J$ of $I$.
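The requirement "for all finite subsets $J$" is stronger than pairwise independence. A standard illustration (an assumption of this sketch, not taken from the text) uses two fair bits $X$, $Y$ and $Z = X \oplus Y$: every pair factorizes, but the whole family does not.

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod

# Assumed experiment: two fair coin flips encoded as bits, each outcome has mass 1/4.
outcomes = list(product((0, 1), repeat=2))
mass = Fraction(1, 4)

# Three random variables on this sample space; Z is the XOR of the two bits.
variables = {
    "X": lambda w: w[0],
    "Y": lambda w: w[1],
    "Z": lambda w: w[0] ^ w[1],
}


def prob(event):
    """Probability of the set of outcomes on which `event` is true."""
    return sum(mass for w in outcomes if event(w))


def factorises(names):
    """Check P(X_i = x_i for all i in J) == prod_i P(X_i = x_i) for every value choice."""
    for values in product((0, 1), repeat=len(names)):
        pairs = list(zip(names, values))
        joint = prob(lambda w: all(variables[n](w) == v for n, v in pairs))
        marginals = prod(
            prob(lambda w, n=n, v=v: variables[n](w) == v) for n, v in pairs
        )
        if joint != marginals:
            return False
    return True


pairwise = all(factorises(pair) for pair in combinations(variables, 2))
whole_family = factorises(tuple(variables))

print("every pair factorises:", pairwise)
print("the triple factorises:", whole_family)
```

Here $\mathbf{P}(X = 0, Y = 0, Z = 1) = 0$ while the product of the three marginal probabilities is $1/8$, so the family is not independent even though every pair is.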

Probability density functions

Recall that a random variable $X$ is continuous if its distribution function $F(x) = \mathbf{P}(X \leq x)$ can be written as \(\begin{equation} F(x) = \int_{-\infty}^x f(u)du \end{equation}\) for some integrable $f: \mathbb{R} \to [0, \infty)$. This is just a general integral: $f$ may or may not be continuous.

Definition 3. The function $f$ is called the (probability) density function (p.d.f.) of the continuous random variable $X$.

Remark. The function $f$ is NOT unique. Changing the values of $f$ on a set of measure zero, for example at finitely many points or on a countable set of points, does not change the value of the integral. However, if $F$ is differentiable at $u$ then we shall normally set $f(u) = F'(u)$.

Next, assume $f$ is continuous; then, by the fundamental theorem of calculus, $F$ is differentiable. Recall \(\begin{equation} \mathbf{P}(a < X(\omega) \leq b) = F(b) - F(a) = \int_{a}^b f(u)du. \end{equation}\) Then $\mathbf{P}(X=x) = F(x) - \lim_{y \uparrow x} F(y)$. $F$ is absolutely continuous for continuous random variables. Thus we have \(\begin{equation} \lim_{y\uparrow x} F(y) = F(x) \quad \Rightarrow \quad \mathbf{P}(X=x) = 0 \quad \forall x\in\mathbb{R}. \end{equation}\) This means the probability that a continuous random variable $X$ takes any particular value is 0. Very roughly speaking, this lies in the observation that there are uncountably many possible values for $X$; this number is so large that the probability of $X$ taking any particular value cannot exceed zero.

The numerical value $f(x)$ is NOT a probability. Consider the probability $\mathbf{P}(x < X \leq x + dx)$ for a very small $dx$: \(\begin{equation} \mathbf{P}(x < X \leq x + dx) = F(x + dx) - F(x) \approx f(x) dx. \end{equation}\) It is the product $f(x)\,dx$, not $f(x)$ alone, that approximates a probability. The value $f(x)$ is a probability per unit length, and it may exceed 1.
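A small numerical illustration may help here. The sketch assumes a specific density chosen only for convenience, $f(u) = 2u$ on $(0, 1)$ with $F(x) = x^2$ there; it is not one of the distributions discussed in the text. At $x = 0.9$ the density is $1.8 > 1$, yet $f(x)\,dx$ tracks the actual probability of the small interval.

```python
from math import isclose


def cdf(x: float) -> float:
    """F(x) = x^2 on [0, 1], 0 to the left and 1 to the right (assumed example)."""
    if x <= 0:
        return 0.0
    if x >= 1:
        return 1.0
    return x * x


def pdf(x: float) -> float:
    """f(x) = F'(x) = 2x on (0, 1) and 0 elsewhere."""
    return 2 * x if 0 < x < 1 else 0.0


x = 0.9
density_at_x = pdf(x)  # exceeds 1, so it cannot be a probability

rows = []
for dx in (1e-2, 1e-3, 1e-4):
    exact = cdf(x + dx) - cdf(x)  # P(x < X <= x + dx)
    approx = pdf(x) * dx          # f(x) dx
    rows.append((dx, exact, approx))
    print(f"dx={dx:g}  exact={exact:.8f}  f(x)dx={approx:.8f}")
```

For this density the exact value is $1.8\,dx + dx^2$, so the relative error of the approximation shrinks in proportion to $dx$.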

Lemma. If $X$ has density function $f$, then

- $\int_{-\infty}^\infty f(x)dx = 1$,

- $\mathbf{P}(X=x) = 0$ for all $x \in \mathbb{R}$,

- $\mathbf{P}(a < X \leq b) = \int_a^b f(x)dx$.

Independence of continuous random variables

Independence of general random variables

Definition 4. Random variables $X$ and $Y$ are called independent if $\{ X \leq x\}$ and $\{Y \leq y\}$ are independent events for all $x, y \in \mathbb{R}$.

Note that this definition is the general definition of the independence of any two variables $X$ and $Y$ regardless of their types. The independence of discrete random variables is included in this definition.

Recall marginalization: for any random variables $X$ and $Y$ with joint distribution function $F(x, y) = \mathbf{P}(X \leq x, Y \leq y)$, we have \(\begin{equation} \mathbf{P}(X\leq x) = \lim_{y\to\infty}F(x, y) \equiv F_X(x), \quad \mathbf{P}(Y \leq y) = \lim_{x\to\infty}F(x, y) \equiv F_Y(y). \end{equation}\) If $X$ and $Y$ are independent, then by Definition 4, \(\begin{equation} \tag{1} F(x,y) = \mathbf{P}(X \leq x, Y \leq y) = \mathbf{P}(X\leq x) \mathbf{P}(Y \leq y) = F_X(x) F_Y(y). \end{equation}\) Note that Eq.(1) involves distribution functions, not mass or density functions. Eq.(1) can be used as the general criterion to determine whether two random variables are independent or not.

Independence of continuous variables

If $X, Y$ are continuous, then we have \(\begin{equation} F(x, y) = \int_{-\infty}^x \int_{-\infty}^y f(u,v)\, dv\, du. \end{equation}\) \(\begin{equation} F_X(x) = \int_{-\infty}^x \int_{-\infty}^\infty f(u, v)\, dv\, du \quad \Rightarrow \quad f_X(x) = \int_{-\infty}^\infty f(x,y)dy, \end{equation}\) where $f_X(x)$ is called the marginal probability density function of $X$. Similarly, we can define $F_Y(y)$ and $f_Y(y)$.

Assume $f(x,y)$ is continuous; then the mixed partial derivative of $F(x,y)$ with respect to $x$ and $y$ exists. Therefore, from Eq.(1), we can derive a very practical criterion to determine the independence of two continuous random variables: \(\begin{equation} \tag{2} f(x,y) = \frac{\partial^2 F(x,y)}{\partial x \partial y} = \frac{\partial^2}{\partial x \partial y} F_X(x) F_Y(y) = f_X(x) f_Y(y). \end{equation}\)

Remark. Note that the prerequisite of Eq.(2) is that $f(x,y)$ is continuous.
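To see Eq.(2) at work, the sketch below compares two joint densities on the unit square, $f(x,y) = x + y$ and $f(x,y) = 4xy$. Both densities, the helper names and the test point $(0.2, 0.3)$ are assumptions made for illustration; both are continuous inside the square, where the check is made. The marginals are computed with a midpoint rule, which is exact for these integrands up to rounding.

```python
from math import isclose


def midpoint_integral(func, a: float, b: float, steps: int = 1000) -> float:
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))


def sum_density(x: float, y: float) -> float:
    """Assumed joint density f(x, y) = x + y on the unit square, 0 elsewhere."""
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0


def product_density(x: float, y: float) -> float:
    """Assumed joint density f(x, y) = 4xy on the unit square, 0 elsewhere."""
    return 4 * x * y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0


def marginal_x(density, x: float) -> float:
    """f_X(x): integrate the joint density over y (support is [0, 1])."""
    return midpoint_integral(lambda y: density(x, y), 0.0, 1.0)


def marginal_y(density, y: float) -> float:
    """f_Y(y): integrate the joint density over x (support is [0, 1])."""
    return midpoint_integral(lambda x: density(x, y), 0.0, 1.0)


def factorisation_gap(density, x: float, y: float) -> float:
    """|f(x, y) - f_X(x) f_Y(y)|; a nonzero gap at some point rules out Eq.(2)."""
    return abs(density(x, y) - marginal_x(density, x) * marginal_y(density, y))


x0, y0 = 0.2, 0.3  # illustrative test point
gap_sum = factorisation_gap(sum_density, x0, y0)
gap_product = factorisation_gap(product_density, x0, y0)

print("x + y :", gap_sum)
print("4xy   :", gap_product)
```

For $x + y$ the marginals are $x + \tfrac12$ and $y + \tfrac12$, whose product differs from $x + y$, so the two variables are dependent. For $4xy$ the marginals are $2x$ and $2y$ and the density factorizes; a single test point can only disprove factorization, so in that case the code confirms the algebra rather than proving independence.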

Example. Uniform distribution. The random variable $X$ is uniform on $[a, b]$ if it has distribution function $$\begin{equation} F(x) = \begin{cases} 0 & x \leq a, \\ \frac{x-a}{b-a} & a < x \leq b, \\ 1 & x > b. \end{cases} \end{equation}$$ The density function is $$\begin{equation} f(x) = \begin{cases} \frac{1}{b-a} & a < x \leq b, \\ 0 & \text{otherwise}. \end{cases} \end{equation}$$

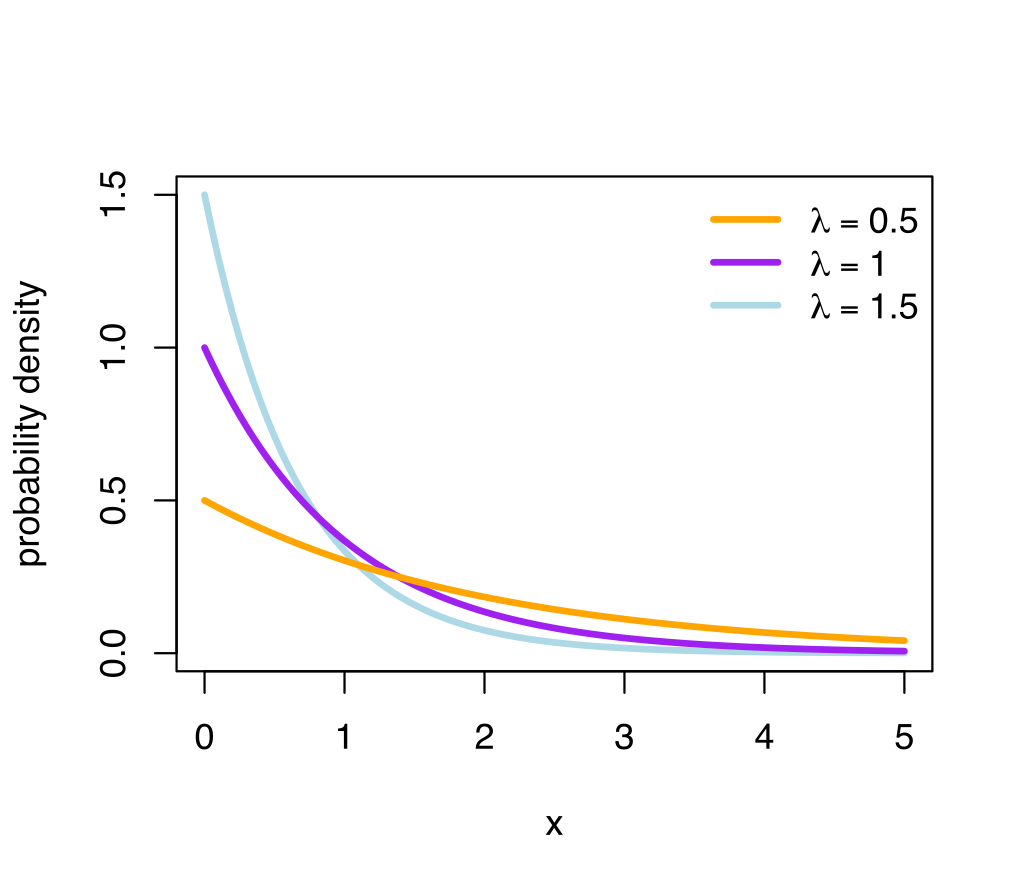

Example. Exponential distribution. The random variable $X$ is exponential with parameter $\lambda(> 0)$ if it has distribution function $$\begin{equation} F(x) = 1 - e^{-\lambda x}, \quad x \geq 0. \end{equation}$$ The density function is $$\begin{equation} f(x) = \begin{cases} \lambda e^{-\lambda x} & x > 0, \\ 0 & \text{otherwise}. \end{cases} \end{equation}$$

Note that $F(x)$ is not differentiable at $x=0$, and correspondingly $f$ has a discontinuity at $x=0$. Thus we need to choose some value for $f(0)$. It doesn't matter what value we choose, as changing $f$ at a single point doesn't affect the integral. Figure 2 shows the p.d.f. of the exponential distribution.
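The claim that the value chosen for $f(0)$ is irrelevant can be illustrated numerically. The sketch below uses a left Riemann sum, which does evaluate the density at $x = 0$. The function names, $\lambda = 1.5$, the interval $[0, 2]$ and the step count are assumptions chosen for illustration.

```python
from math import exp, isclose


def exponential_cdf(x: float, lam: float) -> float:
    """F(x) = 1 - exp(-lam x) for x >= 0, and 0 for x < 0."""
    return 1.0 - exp(-lam * x) if x >= 0 else 0.0


def exponential_pdf(x: float, lam: float, value_at_zero: float = 0.0) -> float:
    """Density lam * exp(-lam x) for x > 0, with a freely chosen value at x = 0."""
    if x > 0:
        return lam * exp(-lam * x)
    if x == 0:
        return value_at_zero
    return 0.0


def left_riemann_sum(func, a: float, b: float, steps: int) -> float:
    """Left-endpoint Riemann sum; note that it evaluates func at a itself."""
    h = (b - a) / steps
    return h * sum(func(a + i * h) for i in range(steps))


lam = 1.5          # illustrative parameter
a, b = 0.0, 2.0    # illustrative interval
steps = 200_000

exact = exponential_cdf(b, lam) - exponential_cdf(a, lam)  # P(a < X <= b)
with_zero = left_riemann_sum(lambda x: exponential_pdf(x, lam, 0.0), a, b, steps)
with_lam = left_riemann_sum(lambda x: exponential_pdf(x, lam, lam), a, b, steps)

print("F(b) - F(a):       ", exact)
print("integral, f(0)=0:  ", with_zero)
print("integral, f(0)=lam:", with_lam)
```

The two sums differ only by the single term $f(0)\,h$, which shrinks with the step size $h$, so both converge to $F(b) - F(a)$.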

Remark. The interpretation of Exponential distribution can be found via Exponential distribution 1 and Exponential distribution 2. Pay attention to the connection between Exponential distribution and Poisson distribution.

Example. Normal (or Gaussian) distribution. The most important continuous distribution, which has two parameters $\mu$ and $\sigma^2$ and density function $$\begin{equation} f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp \left( -\frac{(x-\mu)^2}{2\sigma^2} \right), \quad x\in\mathbb{R}. \end{equation}$$ It is denoted by $N(\mu, \sigma^2)$. If $\mu=0$ and $\sigma^2=1$, then $$\begin{equation} f(x) = \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2}x^2}, \quad x\in\mathbb{R}. \end{equation}$$ is the density of the standard normal distribution. It is easy to generalize the one-dimensional case to the multivariate case. Suppose $\mathbf{x} \in \mathbb{R}^n$, $\mathbf{\mu} \in \mathbb{R}^n$ is the mean vector and $\mathbf{\sigma}^2 \in \mathbb{R}^{n\times n}$ is the covariance matrix. Then $$\begin{equation} f(\mathbf{x}) = \frac{1}{\sqrt{(2\pi)^n \det(\mathbf{\sigma}^2)}} \exp \left( -\frac{1}{2} (\mathbf{x}-\mathbf{\mu})^T (\mathbf{\sigma}^2)^{-1} (\mathbf{x}-\mathbf{\mu}) \right), \quad \mathbf{x} \in \mathbb{R}^n. \end{equation}$$

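Remark. In the 2D case, if the covariance matrix $\mathbf{\sigma}^2$ is diagonal, then the joint density factorizes as $f(x,y) = f_X(x)f_Y(y)$, so $X$ and $Y$ are independent. In this sense, the off-diagonal entries of $\mathbf{\sigma}^2$ measure the dependence between jointly normal variables. In the multivariate case, if $\mathbf{\sigma}^2$ is diagonal, then the components are mutually independent.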
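The factorization for a diagonal covariance matrix can be checked directly from the density formula. The following sketch writes out the bivariate case by hand; the function names, the parameter values and the evaluation point are illustrative assumptions.

```python
from math import exp, isclose, pi, sqrt


def normal_pdf(x: float, mu: float, var: float) -> float:
    """Density of N(mu, var) at x."""
    return exp(-((x - mu) ** 2) / (2 * var)) / sqrt(2 * pi * var)


def bivariate_normal_pdf(x, y, mu_x, mu_y, var_x, var_y, cov_xy):
    """Bivariate normal density with covariance matrix [[var_x, cov_xy], [cov_xy, var_y]]."""
    det = var_x * var_y - cov_xy**2
    dx, dy = x - mu_x, y - mu_y
    # Quadratic form (v - mu)^T Sigma^{-1} (v - mu), using the 2x2 inverse formula.
    quad = (var_y * dx * dx - 2 * cov_xy * dx * dy + var_x * dy * dy) / det
    return exp(-0.5 * quad) / (2 * pi * sqrt(det))


x, y = 1.0, 1.0                  # illustrative evaluation point
mu_x, mu_y = 0.0, 0.0
var_x, var_y = 1.0, 1.0

product_of_marginals = normal_pdf(x, mu_x, var_x) * normal_pdf(y, mu_y, var_y)
joint_diagonal = bivariate_normal_pdf(x, y, mu_x, mu_y, var_x, var_y, cov_xy=0.0)
joint_correlated = bivariate_normal_pdf(x, y, mu_x, mu_y, var_x, var_y, cov_xy=0.5)

print("f_X(x) f_Y(y):      ", product_of_marginals)
print("joint, cov = 0:     ", joint_diagonal)
print("joint, cov = 0.5:   ", joint_correlated)
```

With zero covariance the joint density equals the product of the marginals; with a nonzero covariance it does not, even though the marginals are unchanged.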